In client engagements, I am seeing growing interest in what Trisotech calls Business Automation as a Service. I am seeing it particularly in financial services, but I expect it applies in health care and other verticals as well. Financial services, for so long reliant on legacy applications, is now racing to create new cloud-based apps built on modern architecture, where business automation services built with BPMN and DMN are a great fit. This post will explain why, and how it’s done.

The pattern I am seeing starts with a software product idea from a subject matter expert. How the software is supposed to work is expressed as a collection of Excel tables that illustrate how the tables are updated in response to various business events. The goal is to create a web application that replaces Excel with SQL database tables and automates the table update logic, based on the attributes of each event combined with the data in the existing tables.

Examples I have worked on range from loan underwriting to accounting. The client is typically a startup or a service provider getting started as a software provider. In the early stages their programming resources are thin and focused on the more conventional aspects of web application development: database views and reports, analytics, administrative functions, and the occasional manual update of the tables.

The core IP lies in the automated table updates. BPMN and DMN play a key role there because numerous details of the logic typically remain to be worked out. Excel is great for creating examples, but when you need to generalize the logic to respond automatically to any possible event, you often find you need to tweak the table structure or even add new tables. That's why writing a spec for developers at this stage is a losing battle.

With BPMN and DMN, at least as implemented by Trisotech, the subject matter experts can create the executable logic themselves. If you can create complex models in Excel, you can create automated versions of those models and make the necessary adjustments yourself, without waiting in line for developer resources. Moreover, those automated models can be deployed as cloud services called by the "conventional" parts of the web app.

For example, I am currently working with a client developing an accounting app for companies that buy, sell, and hold a variety of financial assets: loans, securities, and such. Business events received from the trading system, in combination with time-based events such as interest accrual and "mark-to-market" revaluation, result in table updates for each asset, and these tables are ultimately rolled up into the company's financial statements. If it sounds complicated, trust me, it is.

The solution method that I have found to work well in these situations is based on three basic elements:

- A short-running, straight-through BPMN service that automates the sequence of data gathering, decision invocation, and insertion of new table records.

- A DMN-based decision service for creating new table records.

- A data gateway based on OData for virtualizing the database and creating REST services for all table update operations.

OData is an OASIS standard for exposing databases and other data sources as REST services; I wrote about it in a recent post. An OData gateway automatically generates an XML metadata file that plays the same role as the OpenAPI (Swagger) file normally used to define REST APIs. Upon import of that file, Trisotech instantly exposes Create, Retrieve, Update, and Delete (CRUD) operations for all the tables to BPMN and DMN, and converts the datatypes used in these operations to their FEEL equivalents. OData's value here is that it allows subject matter experts to modify the table structures and instantly regenerate the REST APIs for all table operations. This is critical when the tables are not yet finalized and locked down. With Swagger, my experience has been that if you must wait for developers to modify the REST APIs, it's hopeless: by then, things have changed again.
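To make the metadata import concrete, here is a minimal Python sketch that reads an OData v4 metadata document and lists each table's columns with a FEEL type. The namespace and the `EntityType`/`Property` elements come from the OData CSDL format; the `Postings` table, the `feel_types` helper, and especially the Edm-to-FEEL mapping are assumptions for illustration, not Trisotech's actual conversion.

```python
import xml.etree.ElementTree as ET

# CSDL namespace used by OData v4 metadata documents.
EDM_NS = "http://docs.oasis-open.org/odata/ns/edm"

# Illustrative Edm -> FEEL type mapping (an assumption, not Trisotech's actual table).
EDM_TO_FEEL = {
    "Edm.String": "string",
    "Edm.Boolean": "boolean",
    "Edm.Int16": "number",
    "Edm.Int32": "number",
    "Edm.Int64": "number",
    "Edm.Decimal": "number",
    "Edm.Double": "number",
    "Edm.Date": "date",
    "Edm.TimeOfDay": "time",
    "Edm.DateTimeOffset": "date and time",
    "Edm.Duration": "days and time duration",
}

# Hypothetical metadata for one table, as an OData gateway might publish it.
SAMPLE_METADATA = """<edmx:Edmx Version="4.0" xmlns:edmx="http://docs.oasis-open.org/odata/ns/edmx">
  <edmx:DataServices>
    <Schema Namespace="Demo" xmlns="http://docs.oasis-open.org/odata/ns/edm">
      <EntityType Name="Postings">
        <Key><PropertyRef Name="id"/></Key>
        <Property Name="id" Type="Edm.Int64" Nullable="false"/>
        <Property Name="asset" Type="Edm.String"/>
        <Property Name="amount" Type="Edm.Decimal"/>
        <Property Name="postedOn" Type="Edm.Date"/>
      </EntityType>
    </Schema>
  </edmx:DataServices>
</edmx:Edmx>"""


def feel_types(metadata_xml: str) -> dict:
    """Return {entity type: {property: FEEL type}}; unknown Edm types map to Any."""
    root = ET.fromstring(metadata_xml)
    result = {}
    for entity in root.iter(f"{{{EDM_NS}}}EntityType"):
        result[entity.get("Name")] = {
            prop.get("Name"): EDM_TO_FEEL.get(prop.get("Type"), "Any")
            for prop in entity.findall(f"{{{EDM_NS}}}Property")
        }
    return result


if __name__ == "__main__":
    print(feel_types(SAMPLE_METADATA))
```

Because the type information lives in the generated metadata, changing a column in the database and regenerating the file is enough to refresh every datatype at once, which is the property the paragraph above relies on.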

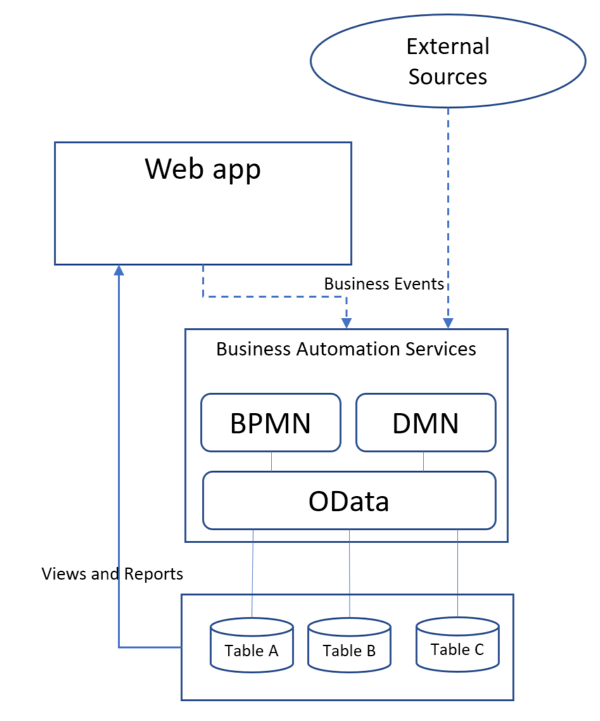

Below you see the basic application architecture:

The Business Automation Services layer receives business events from both external sources and the web app. Each business event triggers a BPMN service that uses OData to retrieve table data, calls a DMN service to generate new table rows, and then uses OData again to insert the new table rows. The web app provides views, reports, and analytics on the table data.
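The retrieve, decide, insert flow can be sketched in a few lines of Python. This is a hypothetical, in-memory illustration: `FakeGateway` stands in for the OData gateway, `decide` stands in for the DMN decision service, and the `Postings` table with its running balance is invented for the example. In the real architecture, each step is a BPMN service task or decision task calling a REST endpoint.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class FakeGateway:
    """Hypothetical in-memory stand-in for the OData gateway (no HTTP)."""
    tables: dict = field(default_factory=dict)
    ids: count = field(default_factory=lambda: count(1))

    def find(self, table, predicate):
        # Plays the role of OData Find: query one table.
        return [row for row in self.tables.get(table, []) if predicate(row)]

    def create(self, table, record):
        # Plays the role of OData Create: insert one row; the gateway assigns its ID.
        row = {"id": next(self.ids), **record}
        self.tables.setdefault(table, []).append(row)
        return row


def decide(event, history):
    """Stand-in for the DMN decision service (hypothetical logic): a running balance."""
    balance = sum(row["amount"] for row in history) + event["amount"]
    return {"Postings": [{"asset": event["asset"], "amount": event["amount"], "balance": balance}]}


def handle_event(gateway, event):
    """One short-running, straight-through process instance per business event."""
    history = gateway.find("Postings", lambda r: r["asset"] == event["asset"])  # retrieve
    new_rows = decide(event, history)                                           # decide
    return [gateway.create(table, row)                                          # insert
            for table, rows in new_rows.items() for row in rows]


if __name__ == "__main__":
    gw = FakeGateway()
    handle_event(gw, {"asset": "L-1", "amount": 100})
    handle_event(gw, {"asset": "L-1", "amount": -30})
    print(gw.tables["Postings"][-1]["balance"])  # 70
```

Keeping `decide` a pure function of its inputs mirrors the separation in the architecture: the decision logic never touches the database, so it can be tested on its own, while the process owns all reads and writes.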

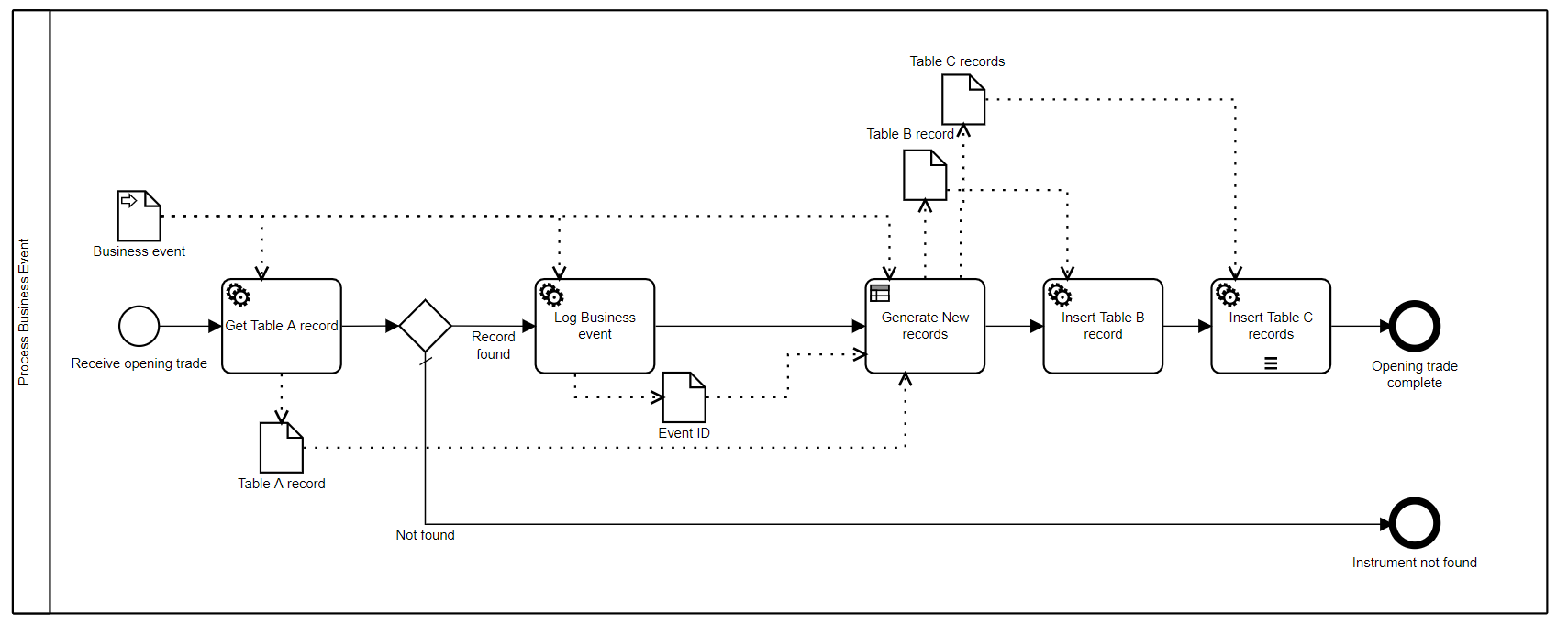

The BPMN process looks like this:

In this simplified example, the process references Table A and manages updates to Tables B and C. Upon receipt of the business event, the process first validates it by matching it to an existing record in Table A. Assuming a match is found, the event is recorded in an event table. OData generates a unique ID for every record, and the ID of the business event is saved as a process variable used to establish identifiers for records in Tables B and C. This example has just three tables, but typically there are 6 to 10 or more, and logging the business event is typically followed by OData Find (query) operations on other tables to obtain the data needed for the decision task.
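A minimal Python sketch of those first process steps, under stated assumptions: the table names, the `ref` field used to match Table A, and stamping `eventId` on Table B and C rows as the linking scheme are all hypothetical. The point it illustrates is that the gateway, not the process, assigns the ID, and the process keeps that ID as a variable for the later inserts.

```python
from itertools import count


class Tables:
    """Hypothetical in-memory tables standing in for the OData-exposed database."""

    def __init__(self):
        self.rows = {"TableA": [], "Events": [], "TableB": [], "TableC": []}
        self._ids = count(1)

    def create(self, table, record):
        # Like OData Create: the gateway, not the caller, assigns the unique ID.
        row = {**record, "id": next(self._ids)}
        self.rows[table].append(row)
        return row


def start_process(db, event):
    """Validate the event against Table A, log it, and return the process variables."""
    matches = [r for r in db.rows["TableA"] if r["ref"] == event["ref"]]
    if not matches:
        raise ValueError(f"event references unknown Table A record {event['ref']!r}")
    logged = db.create("Events", event)
    return {"tableA": matches[0], "eventId": logged["id"]}


def link_to_event(rows, event_id):
    """Stamp the event ID on new Table B/C rows (a hypothetical linking scheme)."""
    return [{**row, "eventId": event_id} for row in rows]


if __name__ == "__main__":
    db = Tables()
    db.create("TableA", {"ref": "L-1"})
    variables = start_process(db, {"ref": "L-1", "kind": "payment"})
    print(variables["eventId"])  # 2
    print(link_to_event([{"amount": -30}], variables["eventId"]))
```

An event that matches nothing in Table A raises an error before anything is written, so invalid events never reach the event table.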

Each process includes a single decision task that generates new rows for all tables. In the process diagram, you see the decision task inputs and outputs visualized as data associations from and to data objects (process variables). The decision task invokes a single DMN service: the data input associations in the process map to the input data elements of the decision model, and its output decisions map to the data output associations in the process. Following the decision task, the decision outputs become the inputs of multiple OData Create (insert) operations. For auditability, all table updates insert additional rows rather than modifying existing ones. OData Create inserts a single record, so when multiple records are generated, we use a multi-instance service task. As we have discussed in the past, Trisotech uses FEEL boxed expressions to map between service parameters and BPMN/DMN variables, so subject matter experts can do this without programming.
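Here is a hedged Python sketch of the output side of the decision task: each output decision is treated as a list of new rows for one table, and a loop plays the role of the multi-instance service task, issuing one single-record insert per row. `create_one`, `apply_decision_outputs`, and the sample rows are hypothetical; the guard at the end simply checks the append-only rule described above.

```python
import copy


def create_one(tables, table, record):
    """Hypothetical single-record insert, mirroring OData Create (one record per call)."""
    tables.setdefault(table, []).append(dict(record))


def apply_decision_outputs(tables, outputs):
    """Multi-instance Create: one insert per row of each output decision.

    `outputs` maps table name -> list of new rows, i.e. one DMN output decision
    per table. Existing rows are never modified, so the tables remain an audit trail.
    """
    before = copy.deepcopy(tables)
    inserted = 0
    for table, rows in outputs.items():
        for row in rows:  # each iteration corresponds to one service-task instance
            create_one(tables, table, row)
            inserted += 1
    # Guard the append-only rule: every pre-existing row is still there, unchanged.
    for table, old_rows in before.items():
        if tables[table][: len(old_rows)] != old_rows:
            raise RuntimeError(f"existing rows in {table} were modified")
    return inserted


if __name__ == "__main__":
    tables = {"TableB": [{"eventId": 1, "amount": 100}]}
    outputs = {
        "TableB": [{"eventId": 2, "amount": -30}],
        "TableC": [{"eventId": 2, "entry": "principal"}, {"eventId": 2, "entry": "interest"}],
    }
    print(apply_decision_outputs(tables, outputs))  # 3
```

Because corrections arrive as new rows rather than edits, the current state of any record is derived from its history, and the history itself is never lost.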

Here is how it works in practice.

- We start by defining the database tables. With MySQL, for example, we use phpMyAdmin.

- From the OData gateway, we download the XML metadata file for the database. Even though DMN is not calling any services, we import the metadata file into the DMN Operation Library as a way to capture all the table datatypes at once.

- The real work is developing the DMN model, where the Excel examples are generalized into executable logic. The decision model must include an output decision for every table where new rows are inserted. After testing the logic, we define a decision service specifying its inputs and outputs, and publish it to the Trisotech Cloud. The service inputs define the data that the process must supply to the decision task.

- In BPMN, we again import the metadata file into the Operation Library. The OData API supports five operations for every table: Find (query), Get (by ID), Create (insert), Update, and Delete. Our method requires just Find and Create. In BPMN, each service task is configured for a particular table and operation, and mapped to input and output data. We discussed how to use these operations in a previous post.

- The decision task is configured by linking to a deployed decision service in the Trisotech environment, and again providing data mapping to the process variables.

- You can now publish the process to the Trisotech Cloud, at which point it is an executable REST service.
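For reference, the five operations correspond roughly to the following OData v4 HTTP requests. This Python sketch only builds the method and URL (it sends nothing); the base URL and `Postings` entity set are hypothetical, and whether a particular gateway uses PATCH or PUT for Update is an assumption. Only Find and Create are needed for the method described here.

```python
from urllib.parse import quote

OPERATIONS = {"Find", "Get", "Create", "Update", "Delete"}


def odata_request(base_url, entity_set, operation, key=None, filter_expr=None, body=None):
    """Return (HTTP method, URL, body) for one of the five table operations.

    Follows OData v4 URL conventions; PATCH for Update is an assumption here.
    """
    if operation not in OPERATIONS:
        raise ValueError(f"unknown operation: {operation}")
    url = f"{base_url.rstrip('/')}/{entity_set}"
    if operation == "Find":  # query, optionally filtered
        if filter_expr:
            url += "?$filter=" + quote(filter_expr, safe="")
        return ("GET", url, None)
    if operation == "Create":  # insert one record
        return ("POST", url, body)
    if key is None:
        raise ValueError(f"{operation} requires a key")
    # String keys are single-quoted (embedded quotes doubled); numeric keys are not.
    literal = "'" + key.replace("'", "''") + "'" if isinstance(key, str) else str(key)
    keyed = f"{url}({literal})"
    if operation == "Get":
        return ("GET", keyed, None)
    if operation == "Update":
        return ("PATCH", keyed, body)
    return ("DELETE", keyed, None)


if __name__ == "__main__":
    base = "https://gateway.example.com/odata/"
    print(odata_request(base, "Postings", "Find", filter_expr="asset eq 'L-1'"))
    print(odata_request(base, "Postings", "Create", body={"asset": "L-1", "amount": 100}))
```

Because the gateway derives these endpoints from the table definitions, a schema change regenerates them without any hand-written API code.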

This method allows the vision for your cloud-based, event-driven app to be realized quickly and demonstrated to prospective clients and investors. The only new skill you need is DMN modeling, to generalize and automate the Excel logic. And we have training for that! The great thing about DMN is that it is designed to be used by subject matter experts... so if you can create your fintech app by example in Excel, you can learn to bring it to life using Trisotech Business Automation services.