One of the singular successes of BPM technology is a common language - BPMN - used both for process modeling and executable design. At least in theory.... In reality, the BPMN created by the business analyst to represent the business requirements for implementation often bears little resemblance to the BPMN created by the BPMS developer, which must cope with real-world details of application integration. That not only weakens the business-IT collaboration so central to BPM's promise of business agility, but it leads to BPMN that must be revised whenever any backend system is updated or changed. It doesn't have to be that way, according to an interesting new book by Volker Stiehl of SAP, called Process-Driven Applications with BPMN (www.springer.com/978-3-319-07217-3).

Process-driven applications are executable BPMN processes with these characteristics:

- Strategic to the business, not situational apps. They must be worth designing for the long term.

- Containing a mix of human and automated activities, not human-only or straight-through processing.

- Spanning functional and system boundaries, integrating with multiple systems of record.

- Performed (with local variations) in multiple areas of the company.

- Subject to change over time, either in business functionality or in underlying technical infrastructure, or both.

- Process-driven applications should be loosely coupled with the called back-end systems. They should be as independent as possible from the system landscape. After all, the composite does not know which systems it will actually run against.

- Process-driven applications, because of their independence, should have their own lifecycles, which ideally are separate from the lifecycles of the systems involved. It is also desirable that the versions of a composite and the versions of the called back-end systems are independent of one another. This protects a composite from version changes in the involved applications.

- Process-driven applications should work only with the data that they need to meet their business requirements. The aim is to keep the number of attributes of a business object within a composite to a minimum.

- Process-driven applications should work with a canonical data type system, which enables a loose coupling with their environment at the data type level. They intentionally abstain from reusing data types and interfaces that may already exist in the back-end systems.

- Process-driven applications should be non-invasive. They should not require any kind of adaptation or modification in the connected systems in order to use the functionality of a process-driven application. Services in the systems to be integrated should be used exactly as they are.

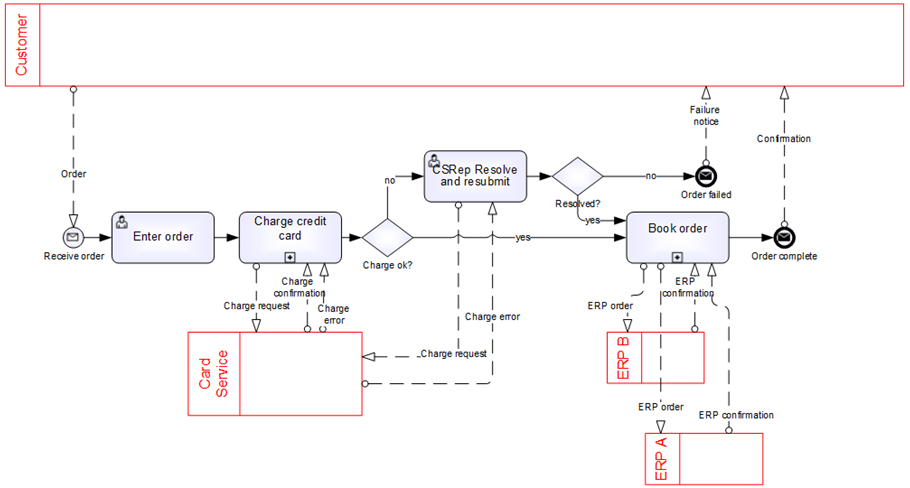

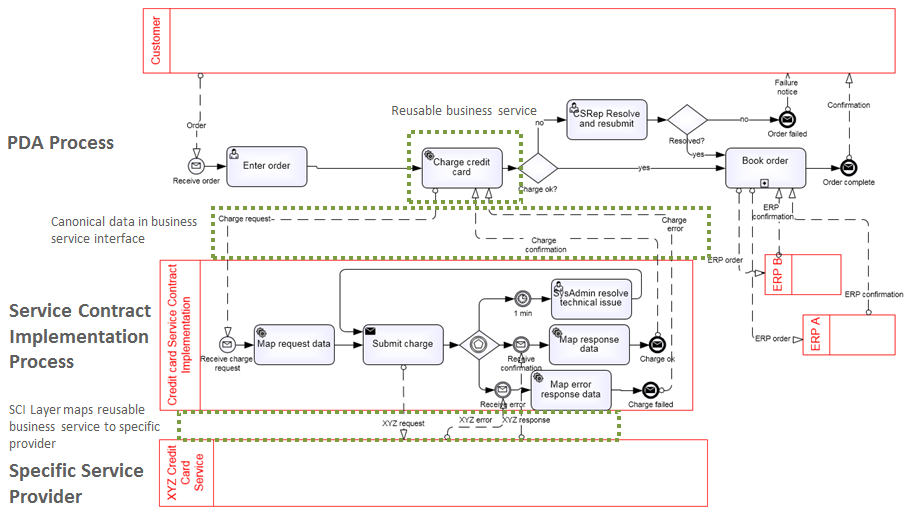

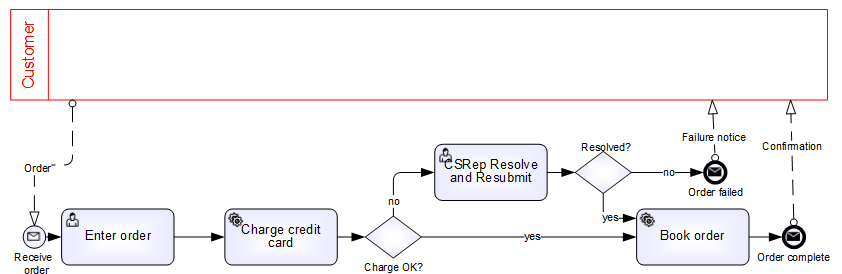

Let’s look at a very simple example, an Order Booking process. Here is the process model created by the business analyst in conjunction with the business. Upon receipt of an order from the customer, an order entry clerk enters it into a form, from which the price is calculated. Then an automated task charges the credit card. If the charge does not succeed, a customer service rep contacts the customer to resolve the problem. Once the charge succeeds, the process books the order in the ERP system, another automated task, and ends by returning a confirmation message to the customer. If the charge fails and cannot be resolved, the process ends by sending a failure notice to the customer.

In the conventional BPMS scenario, here is the developer’s view. It looks the same except that the simple service tasks have been replaced by subprocesses, and the service providers – the credit card processing and ERP booking services – are shown as black box pools with the request and response messages visible as message flows. There are 2 reasons the service tasks were changed to subprocesses: One is to accommodate technical exception handling. What happens if the service returns a fault, or times out? Some system administrator has to intervene, fix the problem, and retry the action. The BA isn’t going to put that in the BPMN, but it needs to be in the solution somewhere. The second reason is to allow for asynchronous calls to the services, with separate send and receive steps. You also notice that Book order is interacting with more than one ERP system. Don’t you wish there was one ERP system that handled everything the customer could buy? Well sometimes there is not, so the process must determine which one to use for each instance. Actually an order could have some items booked in system A and other items booked in system B. The business stakeholders, possibly even the business analyst, may be unaware of these technical details, but the developer must be fully aware.

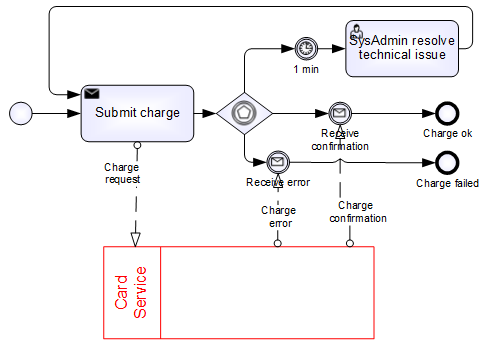

Here is the child level of Charge credit card. It is invoked asynchronously, submitting a charge request and then waiting for a response. If the service times out, an administrator must fix the issue and retry the charge. The service returns either a confirmation if the charge succeeds or an error message if it fails. Here we modeled this as two different messages; in other circumstances we might have modeled these as two different values of a single message. If you remember your Method and Style, the child level has two end states, Charge ok and Charge failed, that match up with the gateway in the parent level.

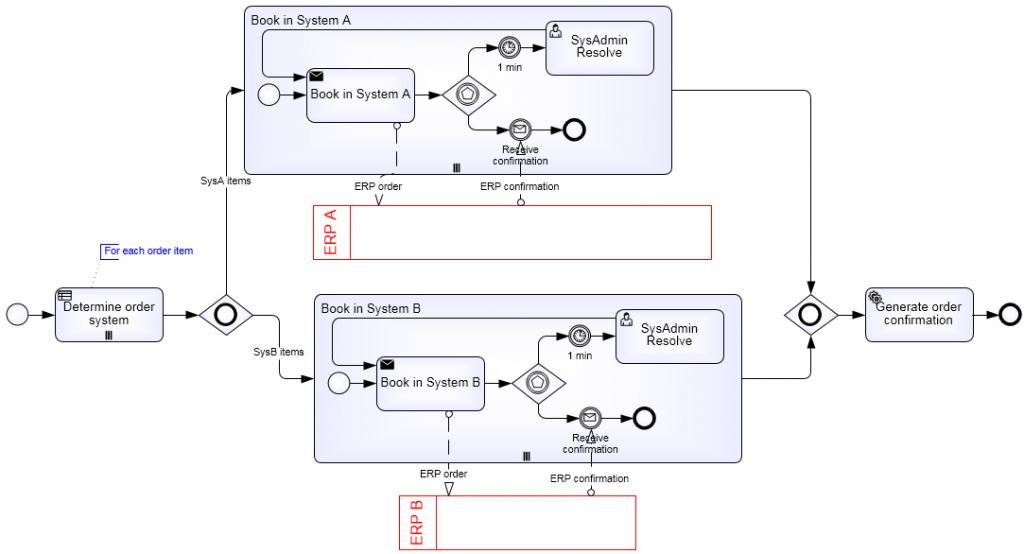

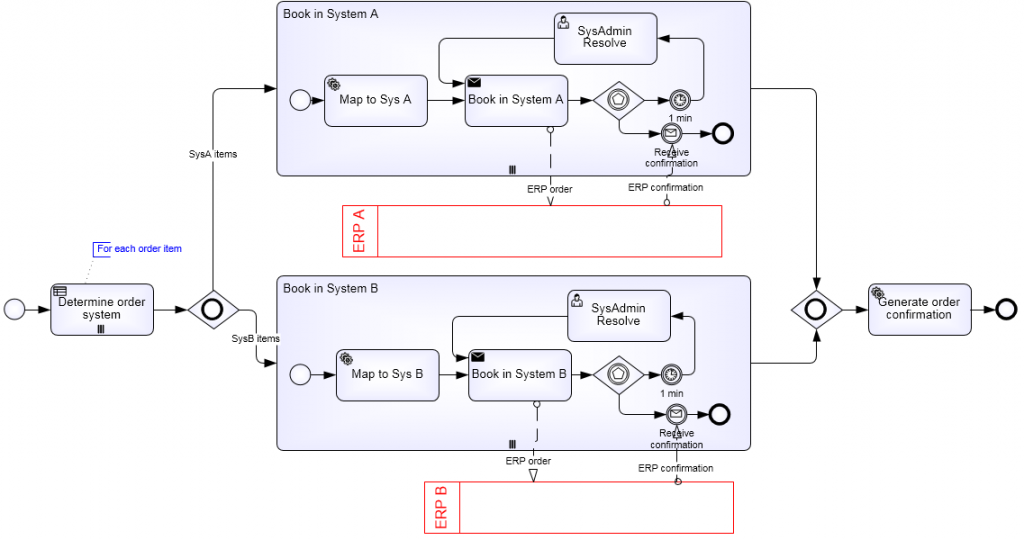

And here is the child level of Book order. A decision task needs to parse the order and determine, for each order item, whether it is handled by system A or system B. Then there are separate booking subprocesses to submit the booking request and receive the confirmation for each order item in each system. Finally, an automated task consolidates all the item confirmations into an overall order confirmation.

So you already can see some of the problems with this approach. The developer’s BPMN is no longer recognizable by the business, possibly even by the BA. This reduces one of BPMN’s most important potential benefits, a common process description shared by business and IT. Second, the integration details are inside the process model. Whenever there are changes to the interface of either the credit card service, ERP system A, or system B, the process model must be changed as well. If this process is repeated in various divisions of the company, using different ERP and credit systems, those process models will all be different. And third, this tight binding of process activities to a SOA-defined interface to specific application systems means the process is manipulating heavyweight business objects that specify many details of no interest to the process.

All three of these problems illustrate what you could call the SOA fallacy in BPM. In theory, SOA is supposed to maximize reuse of business functions performed on backend systems. In practice, SOA has succeeded in enabling more consistent communications between processes and these systems, but the reuse as imagined by SOA architects has been difficult to achieve. The actual reuse by business processes is frequently defeated by variation and change in the specific systems that perform the services. The PDA approach instead seeks to maximize the actual reuse of business-defined functionality provided by services, not across different processes but across variations of the same process caused by variation and change in the enterprise system landscape. This is a radical difference in philosophy.

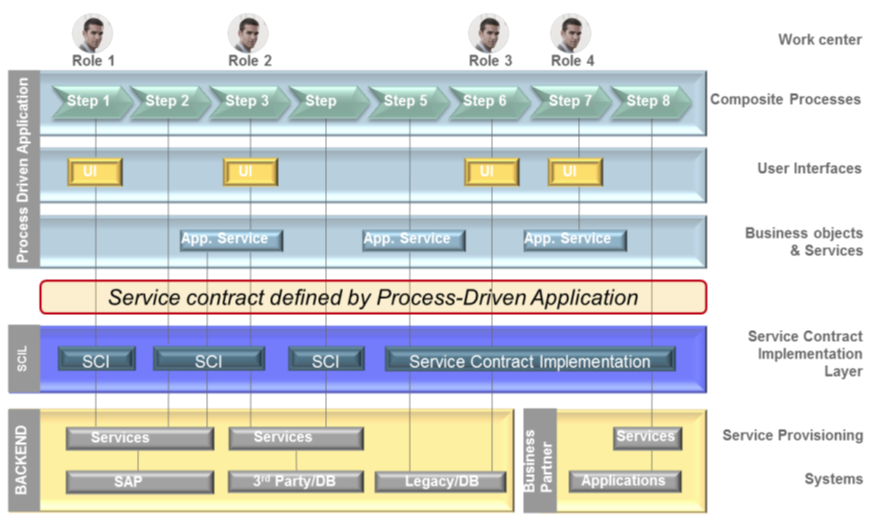

In his book, Volker Stiehl calls this new approach Process-Driven Architecture. This architecture layers the process design and removes all integration details from the business process model, representing the Process-Driven Application, or PDA. The services specified in the PDA process make no reference to the actual interfaces and endpoints of specific backend systems. Instead each service in the PDA process defines and references a fixed service contract interface, specifying just the elements needed to perform the required business function, regardless of the actual interface of the backend systems required. This service contract interface is essentially defined by the business process – by the business, not the SOA architect or integration developer.

The data elements and types used in that interface are based on a canonical data model, not the elements and types specific to a backend system. Remember, the object is not reuse of SOA endpoints and service interfaces across business processes, but reuse of this particular service contract interface across the system differences found in various divisions of the enterprise and across changes in these systems over time. Ideally the PDA process, from a business perspective, is universal across the enterprise and stable over time.

Translation from this stable service contract interface, based on canonical data, to the occasionally changing interfaces and data of real backend systems is the responsibility of the Service Contract implementation layer. What makes this nice is that BPMN can be used in this layer as well. Each integration service call from the PDA process is represented in the architecture by a Service Contract Implementation (SCI) process defined in BPMN. This process effectively binds the system-agnostic call by the PDA process to a specific system or systems used to implement the service. It performs the data mapping required, issues the requests and waits for responses, and handles technical exceptions. The PDA process doesn’t deal with any of this. Moreover, the SCI process is non-invasive, meaning it should not require any change to existing backend systems or existing SOA services. Everything required to link the PDA to these real systems and services must be designed into the SCI process.

The beauty of this architecture is that, unlike the conventional approach, the PDA process model is the same for the business analyst and the integration developer. All of the variation and change inherent in the enterprise system landscape is encapsulated in the SCI process; the PDA process doesn’t change. Effectively the executable process solution becomes truly business-driven.

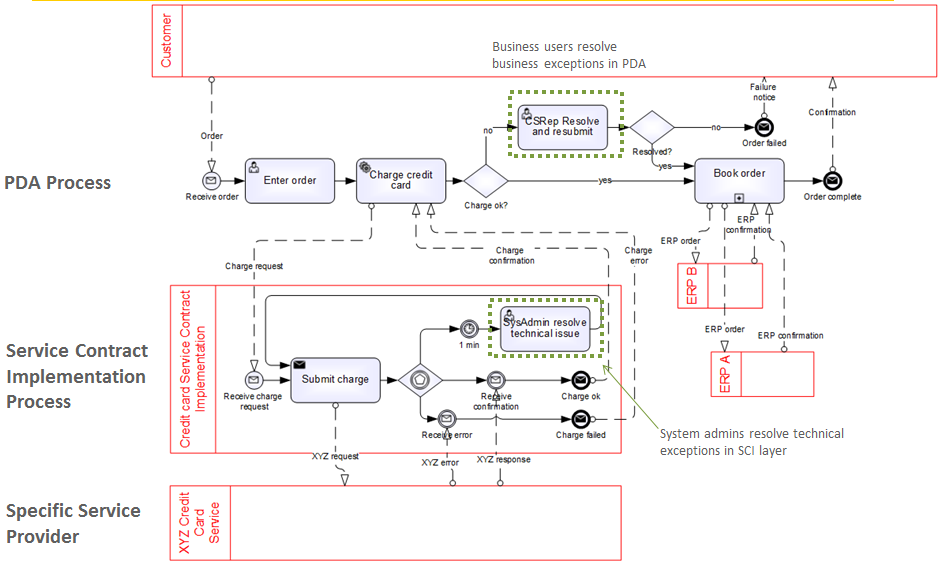

Here is a diagrammatic representation of the architecture. The steps in a PDA process, modeled in BPMN, represent various user interfaces and service calls. When the service call is implemented by a backend system, a business partner, or an external process, its interface (shown here as the Service Contract Interface) is defined by the PDA, not by the external system or process. For each call to the Service Contract Interface, a Service Contract Implementation process is defined, also in BPMN, to communicate with the backend system, trading partner, or external process, insulating the PDA process from all those details. The Service Contract Interface, based on a canonical data model, defines the interface between the PDA process and the SCI process. This neatly separates the work of the process designer, creating the executable PDA process, from that of the integration designer working in the Service Contract Implementation layer. Since the PDA process and the SCI processes are both based on BPMN, the simplest thing is to use the same BPM Suite process engine for both, with communication between them using standard BPMN message flows.

Here is what it looks like with our simple order booking process reconfigured using Process-Driven architecture. The details of Charge credit card are no longer modeled in a child level diagram of the business process, but instead are modeled as a separate SCI process. The charge credit card activity in the PDA process is truly a reusable business service. It defines the service contract interface using only the business data required: the cardholder name, card number and expiration date, charge amount, return status, and confirmation number. It doesn’t know anything about how or where the credit card service is performed, whether it is performed by a machine or a person, the format of the data inputs and outputs, or the communications to the service provider. All of those integration details could change and the PDA process would not need to change. The SCI process maps the canonical request to the input parameters of the actual service provider, issues the request, receives the response, maps that back to the canonical response format, and replies to the PDA service task.
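To make the mapping concrete, here is a minimal Python sketch of that idea, not code from the book. The canonical request and response carry only the business fields listed above. The provider's field names (`pan`, `amt_cents`, `auth_code`), the `fake_provider` stand-in, and its approval rule are all invented for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class ChargeRequest:
    """Canonical request: only the data the business process needs."""
    cardholder_name: str
    card_number: str
    expiration: str
    amount: Decimal


@dataclass(frozen=True)
class ChargeResponse:
    """Canonical response returned to the PDA service task."""
    status: str  # "ok" or "failed"
    confirmation_number: Optional[str] = None


def fake_provider(payload: Dict) -> Dict:
    """Hypothetical card service; approves charges up to 1000.00."""
    approved = payload["amt_cents"] <= 100_000
    return {"result": "APPROVED" if approved else "DECLINED",
            "auth_code": "A-1001" if approved else None}


def charge_via_sci(request: ChargeRequest,
                   provider: Callable[[Dict], Dict] = fake_provider) -> ChargeResponse:
    """SCI role: map canonical -> provider, call, map provider -> canonical."""
    payload = {
        "name": request.cardholder_name,
        "pan": request.card_number,
        "exp": request.expiration,
        "amt_cents": int(request.amount * 100),
    }
    raw = provider(payload)
    if raw["result"] == "APPROVED":
        return ChargeResponse("ok", raw["auth_code"])
    return ChargeResponse("failed")
```

Swapping in a different provider means writing a new mapping function; `ChargeRequest` and `ChargeResponse`, the part the PDA process sees, stay the same.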

If the card service is temporarily unavailable or fails to return a response within a reasonable time period, a system administrator may be required to resolve the problem and resubmit the charge. The business user is not involved in this, and it should not be part of the PDA process. This too is part of the SCI process. However, if the service returns a business exception, such as invalid credentials or the charge is declined, this must be handled by the business process, so this detail is part of the PDA. And in fact, it should be part of the business analyst’s model worked out in conjunction with the business.
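The split between technical and business exceptions can be sketched in a few lines of Python. This is an illustration under assumptions, not the book's implementation: `ServiceTimeout`, `sci_charge`, and the `notify_admin` callback are hypothetical names, and the retry limit is arbitrary. Timeouts are absorbed inside the SCI layer, while a business outcome such as a declined charge is passed straight back to the PDA process.

```python
from typing import Callable


class ServiceTimeout(Exception):
    """Technical fault: the service did not answer in time."""


def sci_charge(call_service: Callable[[], str],
               notify_admin: Callable[[int], None],
               max_attempts: int = 3) -> str:
    """Return the business outcome ("ok" or "declined").

    Timeouts never reach the caller as a business result: each one
    is reported to an administrator and the call is retried.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return call_service()
        except ServiceTimeout:
            notify_admin(attempt)
    # Still a technical problem, so it stays out of the business outcome.
    raise RuntimeError("charge service unavailable after retries")
```

The PDA process would branch only on the returned value, which is exactly the gateway the business analyst already modeled.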

This 2-layer architecture, consisting of a PDA process layer and a Service Contract Implementation layer, succeeds in isolating the business process model from the details of application integration. But there are some problems with it...

- First, the BPMN engine running the PDA and SCI processes must be able to connect directly to all of the backend systems, trading partners, and external processes involved. In many large-scale processes, in particular core processes, this is difficult if not impossible to achieve.

- Second, a single SCI process may involve multiple backend systems and must be revised whenever any of them changes.

- And third, things like flexible enterprise-scale communications, guaranteed message delivery, data mapping and message aggregation are handled more easily, reliably, and faster in an enterprise service bus than in a BPMN process. So we’d like to leverage that if possible.

The fix is to divide the SCI layer itself into a stateful integration process and stateless ESB processes, giving three layers in all. The stateful integration process can be long-running, meaning it can contain human tasks, it can wait for a message, or wait for parallel paths to join. The stateful integration process can process a correlation id, linking an instance of the stateful process to the right process and activity instance in the PDA. The stateless ESB processes cannot do this. More on that in a minute.

To illustrate this let’s look at the activity Book Order, which books the order in the ERP system and generates a confirmation for the customer. Recall that this is what it looked like in our conventional BPMN. We have two ERP systems, and the process needs to look up which system applies to each order item before issuing the booking request. Here you can see some of the defects we have just discussed: The process model must map to the details of system A and system B; the system administrator handling stuck booking requests must be a BPMS user; etc.

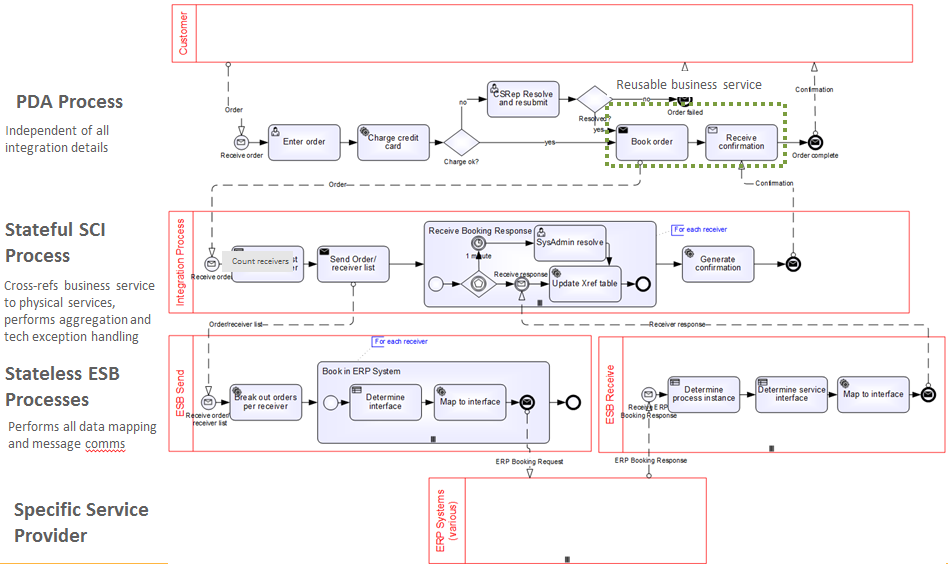

Here is what it looks like in the 3-layer PDA architecture. The PDA process is almost exactly as before. The subprocess Book order is simply an asynchronous send followed by a wait for the response, a simple long-running service call. The request message (the order) and the confirmation response message are defined by the PDA, that is, by the business, without regard to the parameters required by the ERP systems. Book order is a reusable business service in the sense that it can be used with any booking system, now or in the future.

The messy integration work is left to the SCI layer, here divided into a stateful integration process and 2 stateless ESB processes, one for sending and the other for processing the response. Here is what they do... Upon receipt of the order message from the PDA process, the SCI process first parses the order and looks up the ERP system associated with each order item. Really it just needs a count of the receivers of the ERP booking request message, so the process knows how many responses to wait for. This could be a service task or a business rule task depending on the implementation. Let’s say this receiver list is simply put in the header of the order message, which is then sent, using a send task, to the stateless ESB send process.
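As a rough Python sketch of that first step: the routing table `ROUTING`, the item field `sku`, and the `header`/`body` envelope below are assumptions made for illustration. The point is only that the stateful process derives the set of receivers, and therefore the number of responses to expect, before handing the message to the ESB.

```python
from typing import Dict, List

# Hypothetical lookup of which ERP system books which item.
ROUTING: Dict[str, str] = {
    "laptop": "ERP_A",
    "monitor": "ERP_A",
    "desk": "ERP_B",
}


def add_receiver_list(order: Dict, routing: Dict[str, str] = ROUTING) -> Dict:
    """Wrap the order in an envelope whose header lists the receivers."""
    receivers: List[str] = sorted({routing[item["sku"]] for item in order["items"]})
    return {"header": {"receivers": receivers}, "body": order}
```

The length of `header["receivers"]` is the count the stateful process later uses when waiting for booking responses.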

The ESB has the job of dealing with the details of the individual systems. First it splits the order message into separate variables for each system, that is, for each instance of the multi-instance Book in ERP system. For each system, this activity first looks up the interface of the request message, then maps the canonical order data to the system request parameters, providing any additional details required by the system interface, and then sends the ERP booking request to the system. A basic principle of the PDA approach is that the call to the external system or service is non-invasive, i.e. it must not be modified in any way in order to be integrated with the process. The integration process must accommodate its interface as-is.
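In a real ESB this would be done in the bus's own tooling, but the split-and-map logic can be sketched in Python. Everything system-specific here is invented: the two request formats, the mapper names, and the assumption that each item already carries a `system` field naming its receiver.

```python
from typing import Callable, Dict, List


def map_for_erp_a(order_id: str, items: List[Dict]) -> Dict:
    """Hypothetical request format for system A."""
    return {"OrderRef": order_id,
            "Lines": [{"Material": i["sku"], "Qty": i["qty"]} for i in items]}


def map_for_erp_b(order_id: str, items: List[Dict]) -> Dict:
    """Hypothetical request format for system B."""
    return {"order": order_id,
            "positions": [f'{i["sku"]}*{i["qty"]}' for i in items]}


MAPPERS: Dict[str, Callable[[str, List[Dict]], Dict]] = {
    "ERP_A": map_for_erp_a,
    "ERP_B": map_for_erp_b,
}


def build_requests(order: Dict) -> Dict[str, Dict]:
    """Split the canonical order per system, then map each part."""
    per_system: Dict[str, List[Dict]] = {}
    for item in order["items"]:
        per_system.setdefault(item["system"], []).append(item)
    return {system: MAPPERS[system](order["order_id"], items)
            for system, items in per_system.items()}
```

Each backend is addressed in its own format, as-is; supporting a third system would mean adding one mapper, with no change to the canonical order.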

I have shown the ESB process using BPMN but typically ESBs have their own modeling language and tooling. That’s fine. Since it’s a stateless process by definition, the BPMN is not asking the ESB to do anything that cannot be done in its native tooling.

The ERP system sends back its response, which triggers a second stateless process, ESB Receive. We’ve marked this as a multi-participant pool, meaning N instances of it will be created for a single order. The ESB does not know the count. Each ERP booking response simply triggers a new instance. Now here is something interesting: correlation. We need to correlate the booking response to a particular booking request. In a stateful process you can save a request id and use it to match up with the response. But the ESB processes are stateless. The Send process can’t communicate its request id to the Receive process. So the Receive process must parse the order content to uniquely determine the order instance. The receive process must also look up the service interface of the ERP system sending the response and then map the response back to the format expected by the stateful SCI process, the same for all of the called systems.
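One way to picture content-based correlation is the Python sketch below. It assumes, purely for illustration, that a customer id plus an order number uniquely identifies an order, and it invents two response formats. Because `instance_id` depends only on message content, a stateless receiver can recompute it without remembering anything about the original request.

```python
from typing import Dict


def instance_id(customer_id: str, order_number: str) -> str:
    """Correlation key derived purely from business content."""
    return f"{customer_id}:{order_number}"


def normalize_erp_a(response: Dict[str, str]) -> Dict[str, str]:
    """Map a (hypothetical) system A response to the common format."""
    return {
        "instance_id": instance_id(response["Customer"], response["OrderRef"]),
        "system": "ERP_A",
        "confirmation": response["DocNo"],
    }


def normalize_erp_b(response: Dict[str, str]) -> Dict[str, str]:
    """Map a (hypothetical) system B response to the common format."""
    customer, order_number = response["reference"].split("/")
    return {
        "instance_id": instance_id(customer, order_number),
        "system": "ERP_B",
        "confirmation": response["booking_id"],
    }
```

Both normalizers produce the same key for the same order, so the stateful process can match either response to the right instance.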

Now back in the stateful integration process, the subprocess Receive booking response receives the message. Because it is stateful, this process can correlate message exchanges, so the message event is triggered only by a receiver response for this particular process instance. This booking service normally completes immediately, so if no response is received in one minute, something is wrong. Here we’ll say a system administrator resolves the problem and manually books the order in the external system. Even though this human intervention is required, it is outside the scope of the business user’s concerns, and not part of the PDA process. This multi-instance subprocess waits for a message from each receiver. Recall that we derived the count in the first step of this process. So it is quite general. It works for any number of receivers, as long as a receiver can be determined for each order item. This process doesn’t even need to know the technical details of the receiver, its endpoint, interface, or communications methods. All of that is delegated to the ESB. The stateful integration process does need to define a way to extract a unique instance id out of the original order message content, as this logic will be used by the ESB Receive process to provide correlation.
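The stateful side of this can be sketched as a small Python class. `BookingInstance` and the message fields are hypothetical, and the one-minute timeout and administrator step are left out. The sketch shows only the two things the stateless ESB cannot do: remember which instance it belongs to, and count responses until all receivers have answered.

```python
from typing import Dict


class BookingInstance:
    """State held by one instance of the stateful integration process."""

    def __init__(self, instance_id: str, expected_responses: int) -> None:
        self.instance_id = instance_id
        self.expected_responses = expected_responses
        self.confirmations: Dict[str, str] = {}

    def is_complete(self) -> bool:
        """True once every receiver has answered."""
        return len(self.confirmations) >= self.expected_responses

    def on_response(self, message: Dict[str, str]) -> bool:
        """Accept a response only if it correlates to this instance.

        Returns True once all expected responses have arrived.
        """
        if message["instance_id"] == self.instance_id:
            self.confirmations[message["system"]] = message["confirmation"]
        return self.is_complete()
```

Nothing in the class refers to endpoints or system-specific formats; it relies on the ESB having already normalized each response.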

Once the ERP booking response is received, it is used to update a cross-reference table. What is that? This is a table that provides a uniform means of confirmation regardless of the physical system used to book each item. Each of those systems will provide a confirmation string in its own format. The Xref table links each system-specific confirmation string with the confirmation string for the order as a whole, in the format defined by the PDA.
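A cross-reference table of this kind could be as simple as the following Python sketch. The class name, method names, and in-memory list are assumptions for illustration; a real implementation would presumably live in a database.

```python
from typing import Dict, List, Optional, Tuple


class ConfirmationXref:
    """Links system-specific confirmations to one order-level confirmation."""

    def __init__(self) -> None:
        # Rows of (order confirmation, system, system confirmation).
        self._rows: List[Tuple[str, str, str]] = []

    def record(self, order_confirmation: str, system: str,
               system_confirmation: str) -> None:
        self._rows.append((order_confirmation, system, system_confirmation))

    def system_confirmations(self, order_confirmation: str) -> Dict[str, str]:
        """All system-specific confirmations behind one order confirmation."""
        return {s: c for o, s, c in self._rows if o == order_confirmation}

    def order_confirmation_for(self, system: str,
                               system_confirmation: str) -> Optional[str]:
        """Reverse lookup from a system-specific string to the order."""
        for o, s, c in self._rows:
            if s == system and c == system_confirmation:
                return o
        return None
```

The customer only ever sees the order-level string; the system-specific strings stay available for support and reconciliation.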

One final detail before we leave this diagram... the message flows. The message flows linking PDA process to the stateful SCI process, as well as those linking the stateful SCI process to the ESB process, are standard message flows as implemented by the BPMS for process-to-process communication within the product and for reading and writing message queues. The message flows between the ESB processes and the backend systems are more flexible. The transport and message format are probably determined by the external system, whether that is a web service call, file transfer, EDI or whatever the ESB can handle. This communications complexity is completely removed from the BPMS, which is the strength of the ESB approach.

There is a bit more to it, but if you are interested, I suggest you get the book.